What is Crawl Budget and Why is It Important for SEO?

If you know the fundamentals of how search engines work, you know that crawling is the first step before a web page can appear in search results.

Take a minute to think about how Google does its job.

Given the sheer number of websites and web pages, crawling is a complex procedure.

Even though Google constantly improves its algorithms, it’s simply impossible for Googlebot to crawl every single web page. Google addresses this challenge by assigning a crawl budget to each site.

This article will explain what a crawl budget is and why it’s important for your SEO performance.

What is Crawl Budget?

A crawl budget is the number of pages or URLs on your site that Googlebot crawls in a given timeframe.

Google determines the crawl budget based on two factors:

- Crawl Rate Limit: Depends on page speed, website size, server errors, and the crawl limit set in Google Search Console.

- Crawl Demand: The popularity of your pages and the frequency at which your content is updated.

Google decides the crawl budget for your website. Once that budget is used up, Googlebot moves on to other sites.

Importance of Crawl Budget for SEO

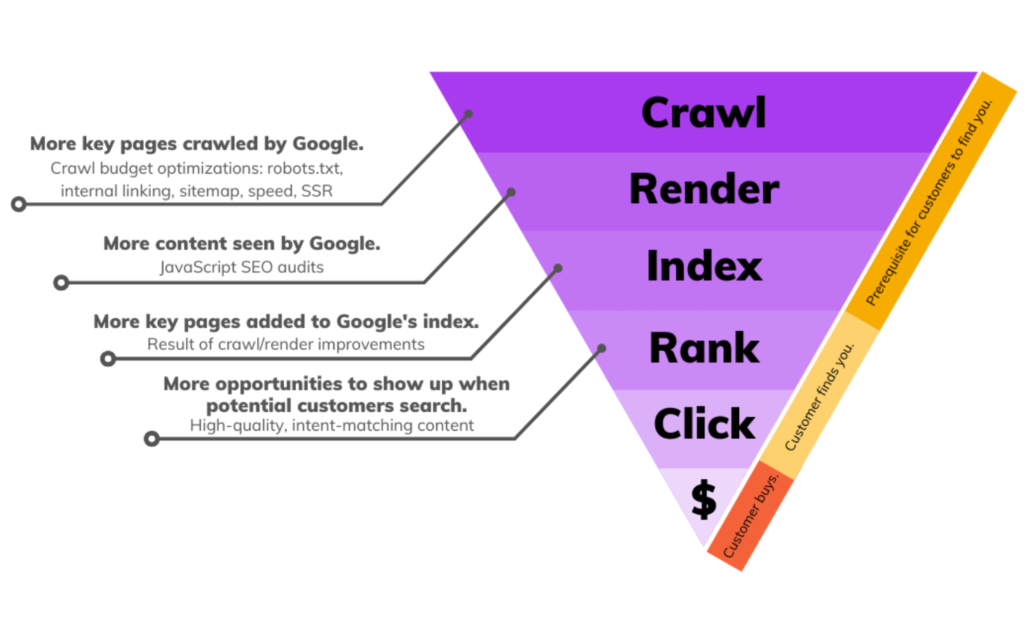

In order to rank your pages, Google needs to index them first.

Source: SearchEngineJournal

If the number of pages on your site exceeds your crawl budget, the excess pages may not get crawled, and pages that aren’t crawled can’t be indexed.

Google has become very efficient at crawling a large number of sites in a given timeframe. As mentioned, website size is one of the main factors in determining the crawl budget.

Therefore, if you have a small website, you don’t really need to worry about the crawl budget. Google can easily crawl sites with fewer than a thousand URLs.

Problems arise when your website has more than 5,000 or 10,000 pages, or when it auto-generates pages based on URL parameters. This is when it becomes essential to optimize your crawl budget.

Here are five crawl budget facts to keep in mind in your SEO efforts.
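To see how quickly URL parameters multiply the number of crawlable pages, here is a small Python sketch. The category URL and the `color`, `size`, and `sort` filter values are hypothetical; a real site with optional filters or varying parameter order would generate even more URLs.

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical filter parameters on one category page (illustrative values only).
FACETS = {
    "color": ["red", "blue", "black", "white"],
    "size": ["s", "m", "l", "xl"],
    "sort": ["price_asc", "price_desc", "newest"],
}


def parameter_urls(base_url, facets):
    """Yield one URL for every combination of one value per parameter."""
    names = list(facets)
    for values in product(*(facets[name] for name in names)):
        yield f"{base_url}?{urlencode(dict(zip(names, values)))}"


urls = list(parameter_urls("https://example.com/shoes", FACETS))
print(len(urls))  # 4 * 4 * 3 = 48 crawlable URLs for a single category page
print(urls[0])    # https://example.com/shoes?color=red&size=s&sort=price_asc
```

Three modest filters already turn one category page into 48 URLs that compete for the same crawl budget.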

5 Facts About Crawl Budget

1. Page Priority

Not every web page provides the same value to the end-user. For example, an eCommerce site may have thousands of pages, but not every page is equally relevant or gets the same number of clicks.

If certain pages get more views, it means they’re more popular and relevant to users. Google tends to crawl these URLs more often to keep them fresh in its index.

How to Prioritize Pages?

- Google suggests pointing internal and external links to your priority pages, since Googlebot prioritizes well-linked pages.

- You can identify your high-value pages using data from Google Analytics and Search Console.

- You can also create a separate XML sitemap for your key pages, organized by URL type or section. This helps Google find and crawl your most valuable pages.
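A dedicated sitemap for key pages can be generated with Python’s standard library. This is a minimal sketch following the sitemaps.org XML format; the page URLs and dates are hypothetical placeholders for your own high-value pages.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, lastmod) pairs.

    lastmod is a W3C date string such as "2023-10-10".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical key product pages for a dedicated sitemap file
key_pages = [
    ("https://example.com/products/best-seller", "2023-10-10"),
    ("https://example.com/products/new-arrival", "2023-10-08"),
]
print(build_sitemap(key_pages))
```

You could save the output as a separate file per section and submit each one in Google Search Console.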

2. Duplicate Content

Since Google already has so much content to process, why would it waste time and resources crawling and indexing pages with the same content? It knows that copied pages, internal search result pages, and thin tag pages won’t provide any real value to the end-user. Hence, Google doesn’t prioritize them.

Duplicate content is a big no-no if you want to ace your SEO efforts.

How to Limit Duplicate Content?

- Set up redirects for all your domain variants (e.g., http and https, www and non-www)

- Disable pages that are solely dedicated to images

- Make sure Google only indexes relevant category and tag pages that have actual content

- Block Google from crawling your internal search result pages via your robots.txt file
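Before deploying a robots.txt change, you can sanity-check it with Python’s built-in `urllib.robotparser`. The sketch assumes your internal search results live under `/search`; adjust the path to your site. Note that this parser only does simple prefix matching and does not replicate every rule Googlebot supports, so treat it as a quick check rather than an exact model of Google’s behavior.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt rules; "/search" is an assumed path for internal search results.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Internal search result pages are blocked; regular product pages stay crawlable.
print(parser.can_fetch("Googlebot", "https://example.com/search?q=shoes"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/products/shoes"))  # True
```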

3. Crawl Rate Limit

The crawl rate limit is one of the two factors that determine a website’s crawl budget. Googlebot tests how much load the site’s server can handle and, based on its responses, raises or lowers the crawl rate limit.

While Google usually sets the crawl rate limit, it gives website owners some control over it. If the crawl rate is too high, Googlebot’s requests may put too much strain on your server, so you need to determine how much load your hosting server can handle.
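To illustrate the idea of a crawler raising or lowering its rate based on server responses, here is a hypothetical Python model. It is not Google’s actual algorithm: the class name, the starting rate, the step size, and the one-second "slow" threshold are all assumptions. It speeds up gradually while the server responds quickly and backs off sharply on errors or slow responses.

```python
from dataclasses import dataclass


@dataclass
class CrawlRateController:
    """Hypothetical adaptive crawl-rate model (not Google's real algorithm)."""
    rate: float = 1.0               # requests per second
    min_rate: float = 0.1
    max_rate: float = 10.0
    step: float = 0.5               # additive increase after a healthy response
    slow_threshold_ms: float = 1000.0

    def record(self, status: int, response_ms: float) -> float:
        """Update and return the rate after one response."""
        overloaded = status == 429 or status >= 500 or response_ms > self.slow_threshold_ms
        if overloaded:
            self.rate = max(self.min_rate, self.rate / 2)  # back off sharply
        else:
            self.rate = min(self.max_rate, self.rate + self.step)  # probe for more capacity
        return self.rate


controller = CrawlRateController()
for status, ms in [(200, 120), (200, 150), (503, 80), (200, 2500)]:
    print(status, ms, "->", controller.record(status, ms))
```

In this model, a fast, healthy server lets the rate climb, while server errors or slow responses cut it in half, which is why a struggling server ends up with fewer crawled pages.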

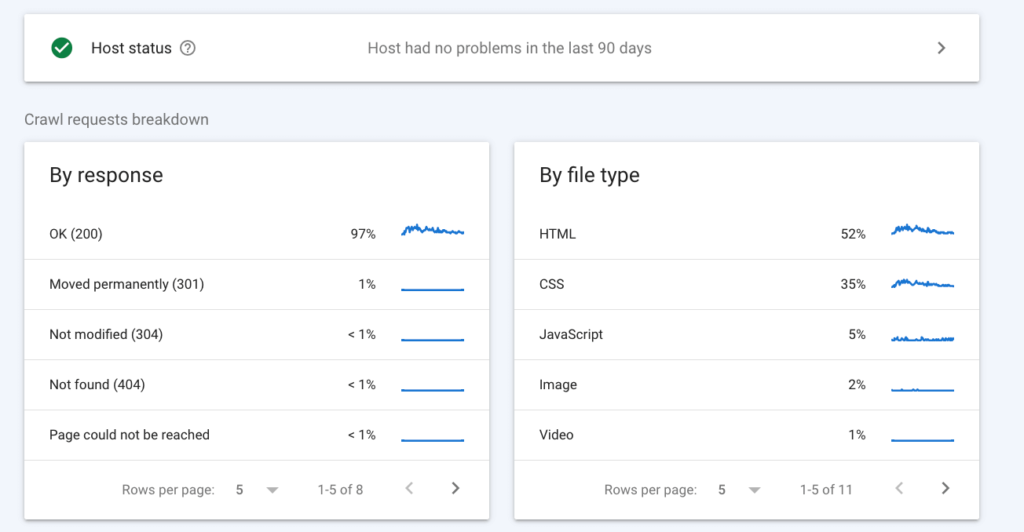

How Do I Check Crawl Status in Google Search Console?

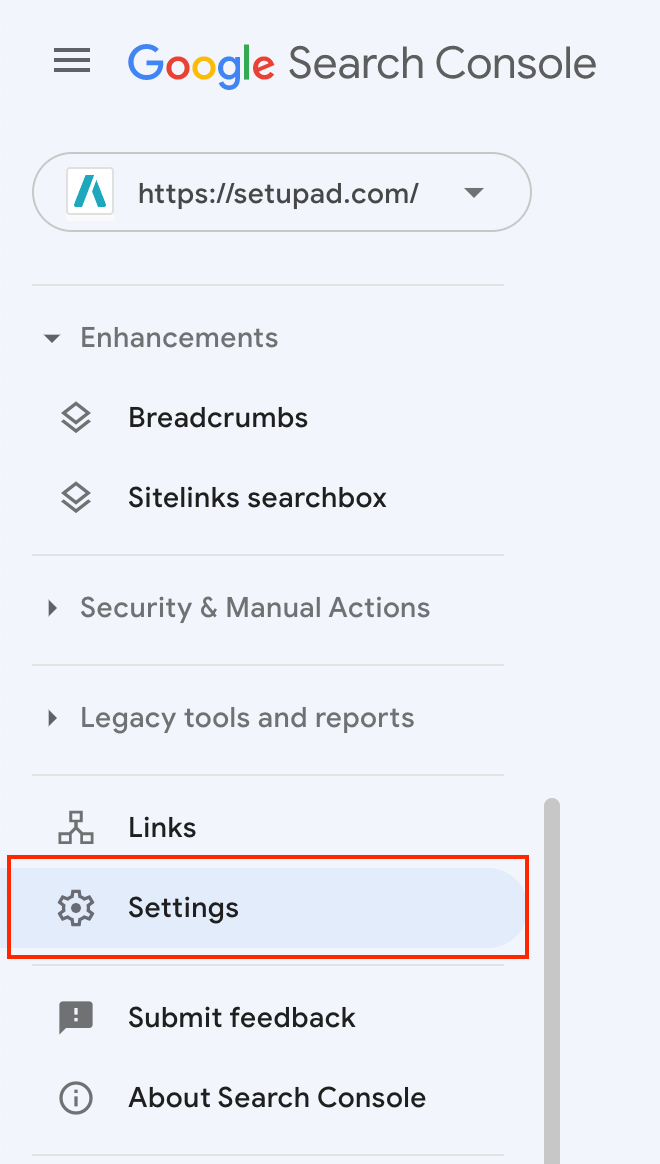

1. Log in to your Google Search Console and go to Settings.

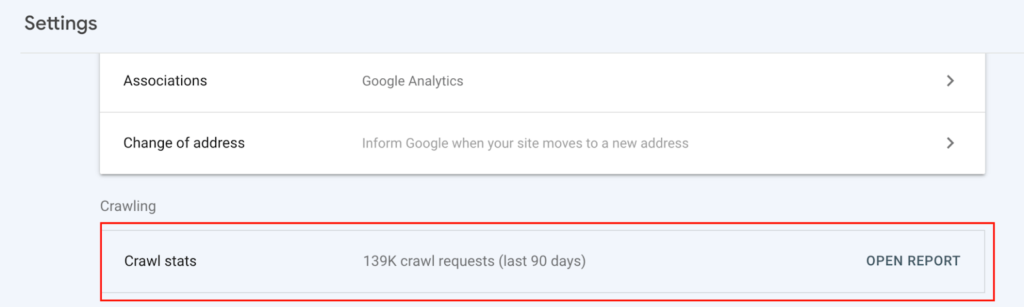

2. Find the Crawl stats report and click Open Report.

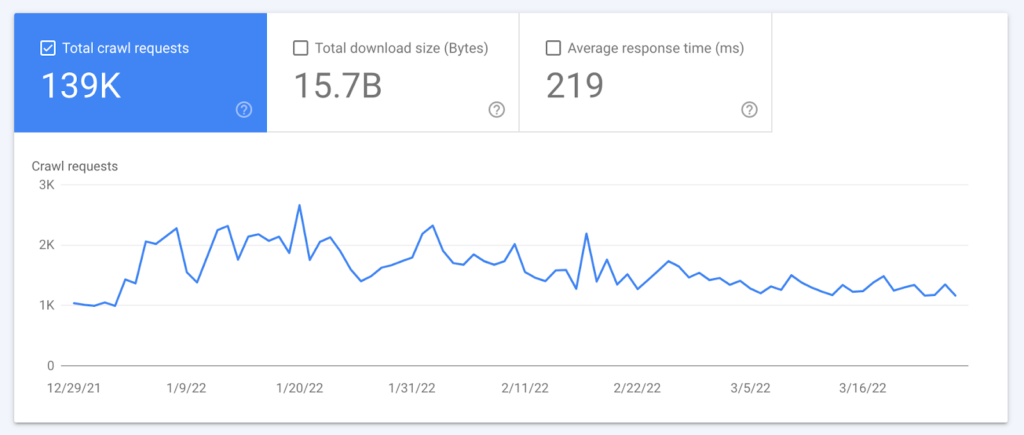

3. You should see a crawling trends chart, host status details, and a crawl request breakdown on the summary page.
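If you also have access to your server’s raw access logs, you can cross-check the Crawl stats report by counting Googlebot requests yourself. This sketch assumes logs in the common Apache/Nginx "combined" format; the sample lines are made up. It filters on the user-agent string only, which can be spoofed, so a thorough audit would also verify that requests really come from Google.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format:
# ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def googlebot_crawl_stats(lines):
    """Count requests whose user agent claims to be Googlebot, per day and per status."""
    per_day, per_status = Counter(), Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip malformed lines and other visitors
        day = match["time"].split(":", 1)[0]  # "10/Oct/2023:13:55:36 +0000" -> "10/Oct/2023"
        per_day[day] += 1
        per_status[match["status"]] += 1
    return per_day, per_status


BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'192.0.2.1 - - [10/Oct/2023:13:55:36 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'192.0.2.1 - - [10/Oct/2023:14:02:11 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{BOT}"',
    '198.51.100.7 - - [10/Oct/2023:14:05:00 +0000] "GET / HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
    f'192.0.2.2 - - [11/Oct/2023:09:00:00 +0000] "GET / HTTP/1.1" 200 2048 "-" "{BOT}"',
]
per_day, per_status = googlebot_crawl_stats(SAMPLE_LOG)
print(dict(per_day))     # {'10/Oct/2023': 2, '11/Oct/2023': 1}
print(dict(per_status))  # {'200': 2, '404': 1}
```

A high share of 404 or 5xx responses in the status counts points to crawl budget being spent on pages that return nothing useful.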

How to Adjust Your Crawl Rate Limit?

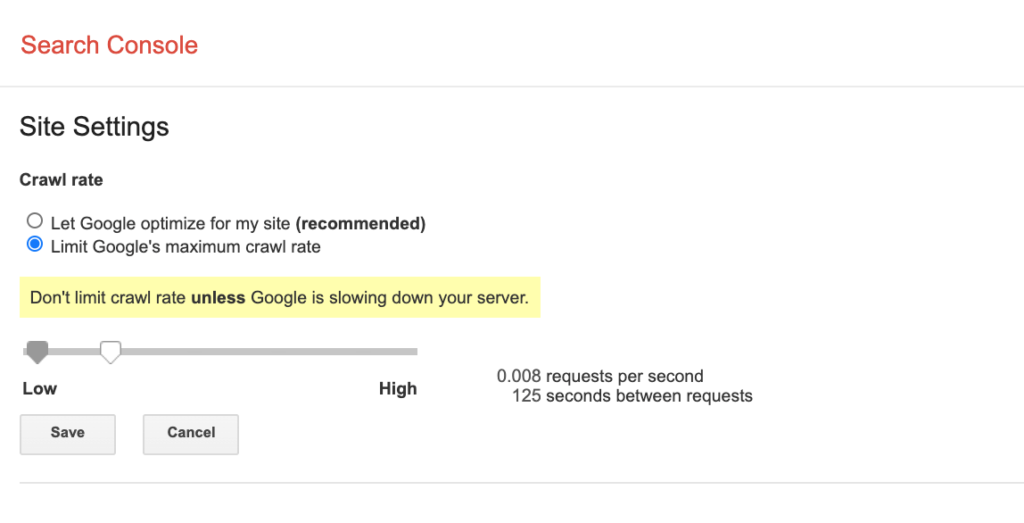

- Go to https://www.google.com/webmasters/tools/settings?siteUrl=https://yourdomain.com/

- Choose the ‘Limit Google’s maximum crawl rate’ option. The new rate will be valid for 90 days.

- If your crawl rate says ‘calculated as optimal’, you can only increase your crawl limit by filing a special request.

Use this setting with caution: a lower limit means Google may crawl fewer of your pages, including important ones. Google recommends against limiting the crawl rate unless you are seeing severe server load problems.

4. Page Load Time

Page speed is one of Google’s ranking factors because it directly affects user experience, which has become even more important since the Core Web Vitals update. Moreover, faster pages allow Google to crawl more pages.

If a website has many pages with high latency, or pages that time out, search engines visit fewer pages within the allotted crawl budget. Common causes include unoptimized images and large media files, slow hosting services, and too many third-party scripts.

You need to optimize your page speed so that Google has more time to visit other web pages and index them.
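A back-of-the-envelope calculation shows why response time matters. This is a deliberately simplified model, not how Google actually allocates crawling: it assumes the crawler has a fixed time window, every request takes the same average time, and connections never sit idle. The one-hour window and response times are hypothetical.

```python
def pages_per_window(window_seconds, avg_response_seconds, parallel_connections=1):
    """Simplified model: pages a crawler can fetch in a fixed time window.

    Assumes every request takes avg_response_seconds and connections never idle.
    """
    return int(window_seconds * parallel_connections / avg_response_seconds)


# Same hypothetical one-hour window, before and after speeding up the server
print(pages_per_window(3600, 2.0))  # 1800
print(pages_per_window(3600, 0.5))  # 7200
```

Under these assumptions, cutting the average response time from 2 seconds to 0.5 seconds lets four times as many pages fit into the same window.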

How to Increase Website Loading Speed?

- Try switching to server-side rendering (SSR) or a dynamic rendering solution so that your content is prerendered for Googlebot.

- Implement lazy loading for ads

- Fix 404 errors

- Enable compression

- Reduce redirects and redirect chains
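Redirect chains (A to B to C) make Googlebot spend several requests to reach one page. This Python sketch flattens a hypothetical redirect map so that every old URL points straight at its final destination, and it flags loops. How you export and re-import your redirect rules depends on your server or CMS, so the dictionary format here is an assumption.

```python
def resolve_redirect_chains(redirects):
    """Map every redirecting URL straight to its final destination.

    `redirects` is a dict {source: target}. Raises ValueError on a redirect loop.
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        while target in redirects:  # the target redirects again: follow the chain
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


# Hypothetical redirect rules with a two-hop chain
REDIRECTS = {"/old": "/older", "/older": "/new", "/promo": "/new"}
print(resolve_redirect_chains(REDIRECTS))
# {'/old': '/new', '/older': '/new', '/promo': '/new'}
```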

5. Content Update

According to Google, the more popular your URLs are on the Internet, the more often Google tends to crawl them to keep them fresh in its index. A recrawl can be prompted when someone tweets your URL, when Google finds new links pointing to the content, or when the URL is updated in your XML sitemap.

But how do you reach that level of popularity?

Through your content.

Google ranks results based on the content’s relevance, quality, usability, context, and other factors. Hence, it usually prefers recrawling sites with high-quality, up-to-date content over those with low-quality, spammy, or duplicate content.

This makes it important for you to update and refresh the content on your web pages frequently.

How to Refresh Content?

- Update data and stats

- Add original data

- Perform a page-level content gap analysis to find topics that could be added to your content
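To decide which pages to refresh first, you could list the ones that haven’t been updated in a while. This is a hypothetical helper: the last-updated dates would come from your CMS or sitemap `lastmod` values, and the one-year threshold is an arbitrary assumption you should tune to your content.

```python
from datetime import date, timedelta


def stale_pages(last_updated, today, max_age_days=365):
    """Return URLs not updated within max_age_days, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [(updated, url) for url, updated in last_updated.items() if updated < cutoff]
    return [url for updated, url in sorted(stale)]


# Hypothetical last-updated dates per page
LAST_UPDATED = {
    "/guide": date(2021, 3, 1),
    "/news": date(2023, 9, 1),
    "/faq": date(2020, 1, 15),
}
print(stale_pages(LAST_UPDATED, today=date(2023, 10, 10)))  # ['/faq', '/guide']
```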

Finishing Thoughts

Search engines keep getting better at crawling more pages, but that takes time. Since you as a publisher have limited control over your crawl budget, the best you can do is regularly publish high-quality content while providing the best possible user experience.

While Setupad takes care of your website monetization, you can dedicate yourself solely to creating quality content.